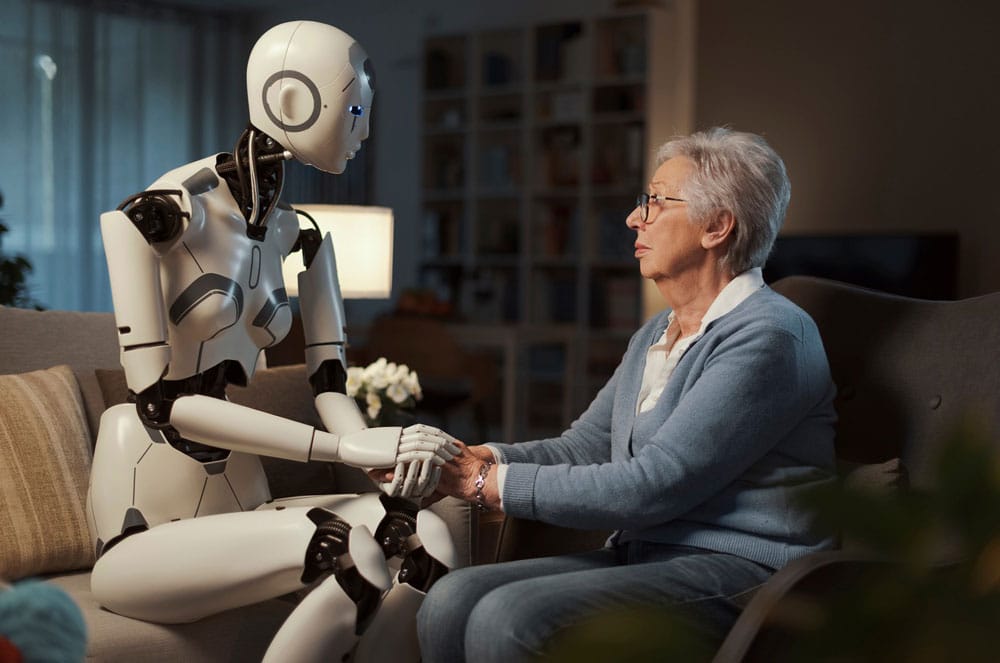

Does the world need AI Psychologists?

Mon 21 Aug 2023

Intersection of AI Hallucinations and Human Perception by Greg Fantham, Assistant Professor at Heriot-Watt University Dubai

The term ‘AI hallucinations’ typically encompasses a variety of ways in which Artificial Intelligence systems generate distortions of reality. These range from extreme instances, such as fake news articles, untrue statements about individuals, past events, or scientific concepts, and medical studies built on fabricated research, to the many minor errors with which users of ChatGPT are becoming increasingly familiar: factual errors, arbitrary fragments of irrelevant information, and misconstrued instructions. By contrast, human hallucinations tend to be restricted to relatively extreme circumstances: mental health disorders such as schizophrenia, other neurological conditions, substance abuse, or chronic sleep deprivation. They are experiences that result from changes in brain activity, particularly in regions that process sensory information, memory, and emotions. It may seem a little harsh, then, to group AI’s many minor errors and mistakes under the label ‘hallucinations’ alongside extreme instances such as ‘deep fakes’, where AI algorithms are used to manipulate videos or images of real people to create fake content. A little alarmist, perhaps? Perhaps not, since both species of hallucination arise from the same psychological foundations. Some of these were engendered in AI by its human creators, probably by accident, and others are of its own devising. Either way, it could be time to start hiring AI psychologists.

Are AI psychologists the right answer?

One human characteristic that AI inherited from its creators is the compulsion to compose realistic answers to questions regardless of whether it knows what it is talking about. In humans, this arises from cognitive biases: those well-trodden mental shortcuts that increase the speed and energy efficiency of thinking, but can also lead to systematic errors. One example is the “availability heuristic”, whereby your brain embraces the first answer that springs to mind so long as it seems to fit. It shoots first and asks questions afterwards. A celebrated example is the tendency to answer the question “What is the most dangerous mode of transport?” with “Air”, due to the intensity of media attention devoted to the small number of airliner crashes compared with the far greater number of lives lost in road accidents. The images at the forefront of your memory provide the most readily available answers. When there is a choice between investigating the probabilities and making an estimate from experience, we tend to go for the latter. And this is exactly what AI’s Large Language Models (LLMs) have been trained to do. Given “To be or …”, the LLM could work through the multi-million probabilities of the next few words or take a shortcut through the complete works of Shakespeare and reply “not to be”, thus satisfying the human demand for quick and real-feeling answers.
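As a rough illustration of that shortcut, the sketch below picks the continuation it has seen most often after a given run of words. It is a deliberately tiny toy, not how a real LLM works internally: the function names `build_continuations` and `most_likely_next`, the three-word context, and the one-line corpus are all assumptions made for this example.

```python
from collections import Counter, defaultdict


def build_continuations(text, context_size=3):
    """Count which word follows each run of `context_size` words in the text."""
    words = text.lower().split()
    table = defaultdict(Counter)
    for i in range(len(words) - context_size):
        context = tuple(words[i:i + context_size])
        table[context][words[i + context_size]] += 1
    return table


def most_likely_next(table, prompt, context_size=3):
    """Return the most frequently seen next word, or None if the context is unseen."""
    context = tuple(prompt.lower().split()[-context_size:])
    counts = table.get(context)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical miniature "training text" standing in for the complete works.
corpus = "to be or not to be that is the question"
table = build_continuations(corpus)
next_word = most_likely_next(table, "To be or")
print(next_word)
```

The toy admits ignorance by returning `None` when it has never seen the context. A language model, by contrast, always produces some continuation, which is one reason its answers can sound convincing even when nothing supports them.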

AI hallucinations

Sensory deprivation experiments, originally conducted by psychologists in the 1950s, demonstrated in a more extreme form the brain’s tendency to do its own thing in the absence of external stimuli. When humans were deprived of all sensory stimuli for long periods, floating in a tank of warm water, their brains generated the most intense and realistic hallucinations. Similarly, dreams normally occur when the brain is disconnected from many of the body’s motor functions during sleep. AI is also a highly complex self-regulating system, and we should not be surprised that it does its own thing in the absence of external stimuli and generates the creative fabrications of reality that are such a cause for concern. What it’s doing is a mystery, but these random fragments of self-generated stimuli will be key contributors to the creativity we are increasingly demanding from it. We may have to attempt to interpret AI’s dreams from the pieces of evidence that escape from its processes. Just like Freud.

Signs of AI hallucinations

For day-to-day purposes, there are indicators that may suggest the occurrence of AI hallucinations. One way to detect them is to test the system with a variety of inputs. If the system consistently produces incorrect or unusual outputs for certain inputs, this could be a sign of hallucination. Another way is to examine the patterns and trends in the system’s outputs. If the outputs are consistently and significantly different from the norm, this could also indicate the presence of hallucinations. It is also important to monitor the system’s behaviour over time, as hallucinations may develop gradually.
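The first of these checks can be sketched in a few lines of code. This is a minimal illustration under stated assumptions, not a standard tool: `flag_suspect_inputs`, the 50% threshold, the test questions, and `fake_model` (a stand-in for whatever system is being tested) are all hypothetical.

```python
from collections import defaultdict


def flag_suspect_inputs(ask, test_cases, threshold=0.5):
    """Return the error rate for each input category whose answers are
    wrong at least `threshold` of the time.

    ask        -- function taking a prompt string and returning an answer string
    test_cases -- list of (category, prompt, expected_answer) tuples
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for category, prompt, expected in test_cases:
        totals[category] += 1
        if ask(prompt).strip().lower() != expected.strip().lower():
            errors[category] += 1
    rates = {category: errors[category] / totals[category] for category in totals}
    return {category: rate for category, rate in rates.items() if rate >= threshold}


# Hypothetical stand-in for an AI system: right about capitals, wrong about dates.
canned_answers = {
    "Capital of France?": "Paris",
    "Capital of Japan?": "Tokyo",
    "Year of the first Moon landing?": "1972",
    "Year the Berlin Wall fell?": "1991",
}


def fake_model(prompt):
    return canned_answers[prompt]


test_cases = [
    ("capitals", "Capital of France?", "Paris"),
    ("capitals", "Capital of Japan?", "Tokyo"),
    ("dates", "Year of the first Moon landing?", "1969"),
    ("dates", "Year the Berlin Wall fell?", "1989"),
]

flagged = flag_suspect_inputs(fake_model, test_cases)
print(flagged)
```

Grouping the questions by category is what turns isolated slips into a pattern: a single wrong answer says little, whereas a category that fails every time points to the kind of input on which the system tends to fabricate.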

As we have seen, despite the apparent differences, these altered states of perception ‘experienced’ by AI and humans share several common features, underscoring the significance of comprehending and managing hallucinations in both contexts. By exploring these similarities, experts can gain crucial knowledge of the intricacies of perception and cognition, and of how to create more resilient and dependable AI systems, which could lead to revolutionary breakthroughs in the fields of technology and psychology.

About the writer

Greg Fantham is an Associate Professor at Heriot-Watt University Dubai.

